Vibe Coding Will Break Your Enterprise

Vibe coding doesn’t solve real problems in enterprise settings — it makes them worse. Lovable, Replit, and their ilk promise instant gratification. But in the world of sprawling systems, audit trails, and regulatory scrutiny, those “vibes” won’t cut it.

While such tools are valuable for rapid prototyping and perhaps isolated greenfield use cases, they are ill-suited for building and operating autonomous AI systems in the enterprise, particularly within the highly regulated, service-oriented architectures of financial services. They are toys for the startup playground, not bulldozers.

Playground Tools Can’t Run Boardrooms

Build for Service-Oriented Systems, Not Web Applications

Platforms like Replit and Lovable are optimized for web-native contexts where applications are self-contained and short-lived. That model works well for hobby projects or lightweight SaaS prototypes, where it’s not catastrophic to accidentally delete the production database. But financial institutions and other enterprises don’t live in that world.

They operate sprawling, distributed systems where services are interconnected, tightly controlled, and weighed down by decades of technical and procedural debt. In these environments, AI development agents must be capable of far more than simply generating code snippets or single-use workflows.

Enterprise-ready AI agents must integrate seamlessly with:

Internal APIs and legacy databases

Identity and access management systems

Compliance gates and regulatory controls

Human-in-the-loop approval workflows

This requires secure service integration, long-lived state, transactional guarantees, observability, and rollback capabilities—all of which are either absent or poorly addressed in “vibe coding” environments.
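Of these, rollback is the easiest to make concrete. Below is a minimal sketch that assumes a saga-style pattern, in which each step is paired with a compensating action. It is not taken from any particular tool or platform, and `Step`, `run_saga`, and the payment functions are hypothetical names. A saga provides compensation rather than full ACID isolation, so it covers only part of what "transactional guarantees" means in practice.

```python
# Hypothetical sketch: saga-style rollback with an audit trail.
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Step:
    """One unit of work paired with the compensating action that undoes it."""
    name: str
    action: Callable[[dict], None]
    compensate: Callable[[dict], None]


@dataclass
class SagaResult:
    succeeded: bool
    audit_log: list[str] = field(default_factory=list)


def run_saga(steps: list[Step], state: dict) -> SagaResult:
    """Run steps in order; on the first failure, compensate completed steps in reverse."""
    result = SagaResult(succeeded=True)
    completed: list[Step] = []
    for step in steps:
        try:
            step.action(state)
        except Exception as exc:
            result.succeeded = False
            result.audit_log.append(f"failed:{step.name}:{exc}")
            # Undo only the steps that finished, newest first.
            for done in reversed(completed):
                done.compensate(state)
                result.audit_log.append(f"rolled_back:{done.name}")
            return result
        completed.append(step)
        result.audit_log.append(f"done:{step.name}")
    return result


# Hypothetical two-step payment: reserve funds, then post to a ledger that fails.
def reserve_funds(state: dict) -> None:
    state["reserved"] = True


def release_funds(state: dict) -> None:
    state["reserved"] = False


def post_to_ledger(state: dict) -> None:
    raise RuntimeError("ledger unavailable")


def reverse_ledger_entry(state: dict) -> None:
    state.pop("ledger_entry", None)


state: dict = {}
outcome = run_saga(
    [Step("reserve", reserve_funds, release_funds),
     Step("post", post_to_ledger, reverse_ledger_entry)],
    state,
)
print(outcome.succeeded, state, outcome.audit_log)
# False {'reserved': False} ['done:reserve', 'failed:post:ledger unavailable', 'rolled_back:reserve']
```

The failed step is not compensated because its action never completed. Every transition is recorded in the audit log, which gives reviewers a trace of what was undone and why.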

Don’t Build a New User Interface for Every Workflow

One of the great unlocks of large language models is the unification of user interfaces around natural language. Enterprises should no longer build a new UI for every workflow. Historically, every new capability came with a new interface for employees to learn. Those partitioned interfaces add friction when integrating workflows and create unintended knowledge barriers. That detrimental effect compounds quickly across large organizations.

Chatbots have already demonstrated the versatility of flexible, multi-modal interfaces. But agentic systems go further: they turn natural language into adaptive supervisory interfaces. They help operators oversee and manage stateful, reliable AI agents. They standardize patterns for security, human escalation, monitoring, and governance. And they grow with the enterprise instead of collapsing under the weight of that growth, an outcome every enterprise should treat as an imperative.

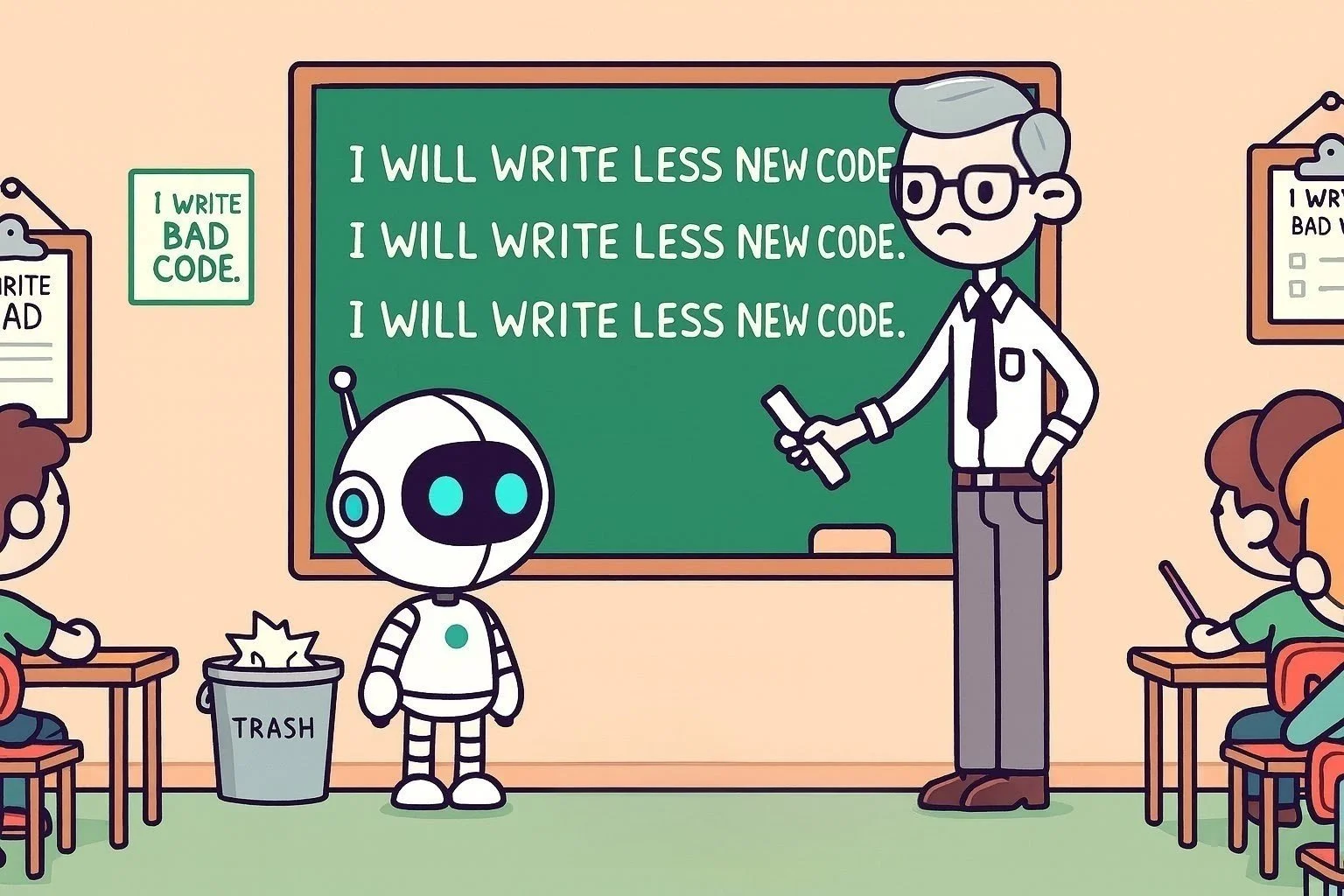

Don’t Generate Code Naïvely; Design Engineered Systems

Enterprise-grade agents are not mere outputs of language model inference. They are products of human-AI collaboration. They are engineered systems that maintain state, reason over long horizons, interact with services, and escalate or defer to human operators based on policy. Treating them as disposable code snippets ignores the realities of enterprise software.
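To make "escalate or defer based on policy" concrete, here is a minimal sketch. It assumes a policy made up of an allow-list of action kinds, a monetary auto-approval limit, and a confidence threshold. `Policy`, `ProposedAction`, `evaluate`, and the threshold values are all hypothetical. In a real deployment, policies would come from the enterprise's own risk and entitlement systems.

```python
# Hypothetical sketch: policy-driven execute / escalate / deny decision for an agent.
from dataclasses import dataclass
from enum import Enum


class Decision(Enum):
    EXECUTE = "execute"    # agent may proceed on its own
    ESCALATE = "escalate"  # route to a human approver
    DENY = "deny"          # outside the agent's mandate entirely


@dataclass(frozen=True)
class ProposedAction:
    kind: str          # e.g. "payment" or "report"
    amount: float      # monetary exposure of the action
    confidence: float  # agent's self-reported confidence in [0, 1]


@dataclass(frozen=True)
class Policy:
    allowed_kinds: frozenset[str]
    auto_approve_limit: float
    min_confidence: float


def evaluate(action: ProposedAction, policy: Policy) -> Decision:
    """Decide whether the agent acts, defers to a human, or is blocked."""
    if action.kind not in policy.allowed_kinds:
        return Decision.DENY
    if action.amount > policy.auto_approve_limit:
        return Decision.ESCALATE
    if action.confidence < policy.min_confidence:
        return Decision.ESCALATE
    return Decision.EXECUTE


policy = Policy(frozenset({"payment", "report"}), auto_approve_limit=10_000, min_confidence=0.9)
print(evaluate(ProposedAction("payment", 500, 0.95), policy))     # Decision.EXECUTE
print(evaluate(ProposedAction("payment", 50_000, 0.95), policy))  # Decision.ESCALATE
print(evaluate(ProposedAction("wire_abroad", 10, 0.99), policy))  # Decision.DENY
```

The policy is data, not code, so it can be reviewed, versioned, and changed without regenerating the agent.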

At Morgan Stanley, where I previously chaired the Architecture Committee, we routinely emphasized that only 20% of engineering effort goes into writing new code; 80% is expended on understanding, integrating, maintaining, and securing existing systems. Generative AI developer tools that churn out new code without awareness of the broader architectural landscape don’t accelerate progress — they create a net-negative impact, accruing unbounded technical debt and operational fragility.

More code is NOT better.

An overwhelming majority of effort in enterprise software engineering goes into maintaining existing applications. Generating new applications without sufficient code reuse only compounds that problem, because every new application becomes one more system to maintain.

Even with the new crop of agentic coding tools such as Cursor and Devin, anecdotal evidence inside large enterprises suggests a troubling pattern: after only a few cycles of “AI-driven refactoring” on unconstrained codebases, much of the code ends up rewritten. It quickly becomes unrecognizable to the software engineers who remain responsible for its maintenance and reliability. Worse yet, if AI-driven code review and release processes wave it through, it could reach production with disastrous consequences.

This is why we advocate an agentic development paradigm rooted in code reuse, composition, and integration, with controls that remain just as important as before. AI should be used as a force multiplier to reason about and operate within existing systems, not to circumvent them with lightweight implementations that disregard enterprise considerations.

Toward Agentic Builders, Not Just Good Vibes

Enterprise adoption of generative AI will not succeed if it is reduced to prompt-driven code synthesis. In a recent study, researchers drew a clear line: vibe coding is great for ideation, but agentic systems are needed for development at scale. Software engineering has always been about a lot more than just writing code.

The future lies in agentic builders that use LLMs not only to power autonomous operations but also to assist in creating new agents and evolving them. This is a higher-order abstraction than AI-driven code generation: it is AI-driven systems engineering.

At Artian, we have built a platform to make this possible — governed, composable, enterprise-grade multi-agent systems that grow in scope over time and integrate seamlessly with the existing fabric of financial institutions.

AI Autonomy Requires Orchestration and Governance

Vibe coding tools excel at scripting tasks and consumer web applications, not at engineering agents with complex autonomy profiles. At Artian, we define a spectrum of autonomy levels — from AI-assisted workflows to fully autonomous agents under supervisory control. Each level requires differentiated capabilities: secure APIs, memory and state abstraction, concurrency models, entitlements enforcement, compliance controls, audit logging, and run-time policy evaluation.
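One way to picture a spectrum of autonomy levels with differentiated capabilities is as a cumulative mapping from each level to its required controls, checked by a deployment gate. This is a hypothetical sketch. The three level names, the control identifiers, and the assignment of controls to levels are illustrative assumptions, not Artian's actual taxonomy.

```python
# Hypothetical sketch: autonomy levels mapped to cumulative control requirements.
from enum import IntEnum


class Autonomy(IntEnum):
    ASSISTED = 1    # AI drafts, a human executes
    SUPERVISED = 2  # AI executes, a human approves each action
    AUTONOMOUS = 3  # AI executes under supervisory control and policy


# Controls each level adds on top of everything required by the levels below it.
CONTROLS_ADDED: dict[Autonomy, set[str]] = {
    Autonomy.ASSISTED: {"secure_api", "entitlements", "audit_logging"},
    Autonomy.SUPERVISED: {"state_management", "approval_workflow"},
    Autonomy.AUTONOMOUS: {"runtime_policy", "concurrency_control", "compliance_gate"},
}


def required_controls(level: Autonomy) -> set[str]:
    """Union of the controls added at this level and every lower level."""
    return set().union(*(CONTROLS_ADDED[lvl] for lvl in Autonomy if lvl <= level))


def missing_controls(level: Autonomy, provided: set[str]) -> set[str]:
    """Controls a deployment gate would still demand before allowing this level."""
    return required_controls(level) - provided


provided = {"secure_api", "entitlements", "audit_logging", "state_management"}
print(sorted(missing_controls(Autonomy.SUPERVISED, provided)))  # ['approval_workflow']
```

Making the requirements cumulative means an agent cannot be promoted to a higher level without also keeping every safeguard from the lower ones.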

Our platform is built from first principles to support these needs. We provide a high-level specification that allows agentic workflows to be authored in natural language but compiled into governed runtime artifacts with deterministic behavior. This is what we call deterministic non-determinism: you choose exactly where, within an otherwise structured workflow, to leverage the non-deterministic and creative abilities of AI.
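The idea of choosing where non-determinism is allowed can be sketched as a fixed sequence of steps. Only steps explicitly marked `generative` call a model, and their output is merged into the workflow state only after it passes a validator. This is a minimal sketch under stated assumptions, not the platform's specification format. `WorkflowStep`, `execute`, and the classification example are hypothetical, and the model call is stubbed with a plain function so the example runs offline. A real model call would remain non-deterministic; the structure only confines it to one validated step.

```python
# Hypothetical sketch: a fixed workflow where only marked steps may use a model.
from dataclasses import dataclass
from typing import Callable

Context = dict  # accumulated workflow state, a plain dict for simplicity


@dataclass
class WorkflowStep:
    name: str
    run: Callable[[Context], Context]
    generative: bool = False  # marks a step whose output comes from a model
    validate: Callable[[Context], bool] = lambda out: True  # guard for generative output


def execute(steps: list[WorkflowStep], ctx: Context) -> tuple[Context, list[str]]:
    """Run steps in a fixed order; model output is merged only if it passes validation."""
    trace: list[str] = []
    for step in steps:
        out = step.run(ctx)
        if step.generative and not step.validate(out):
            raise ValueError(f"{step.name}: model output rejected: {out!r}")
        ctx = {**ctx, **out}
        trace.append(step.name)
    return ctx, trace


ALLOWED_CATEGORIES = {"fraud_review", "standard"}


def load_transaction(ctx: Context) -> Context:
    # Deterministic: would read from a system of record; stubbed here.
    return {"amount": ctx["amount"]}


def classify_with_model(ctx: Context) -> Context:
    # Stand-in for an LLM call; a real model could return anything at all.
    return {"category": "fraud_review" if ctx["amount"] > 5_000 else "standard"}


def route(ctx: Context) -> Context:
    # Deterministic: fixed mapping from category to work queue.
    return {"queue": "fraud" if ctx["category"] == "fraud_review" else "ops"}


steps = [
    WorkflowStep("load", load_transaction),
    WorkflowStep("classify", classify_with_model, generative=True,
                 validate=lambda out: out.get("category") in ALLOWED_CATEGORIES),
    WorkflowStep("route", route),
]
final, trace = execute(steps, {"amount": 12_000})
print(final["queue"], trace)  # fraud ['load', 'classify', 'route']
```

The step order and routing logic never vary. Only the classification can vary, and any value outside the allowed set stops the workflow before it can influence a downstream system.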

Agents are traceable, rollback-capable, and integrate with existing enterprise systems through strongly typed execution adapters. In short: our AI builds and operates agents with the discipline of enterprise engineering.
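A strongly typed execution adapter can be approximated as a typed boundary between untyped model output and an existing system. The sketch below assumes a hypothetical ledger. `BalanceQuery`, `LedgerAdapter`, `parse_query`, `fetch_balance`, and `InMemoryLedger` are illustrative names, and the in-memory ledger stands in for a real system of record. The parser rejects malformed requests before any system call is made, and the adapter records each call so it can be traced.

```python
# Hypothetical sketch: a typed adapter between agent output and a legacy system.
from dataclasses import dataclass, fields
from typing import Protocol


@dataclass(frozen=True)
class BalanceQuery:
    account_id: str
    currency: str


@dataclass(frozen=True)
class BalanceResult:
    account_id: str
    balance_cents: int


class LedgerAdapter(Protocol):
    """Contract an agent must go through to reach the (hypothetical) ledger."""
    def get_balance(self, query: BalanceQuery) -> BalanceResult: ...


def parse_query(raw: dict) -> BalanceQuery:
    """Turn untyped model output into a typed request, or fail before any system call."""
    expected = {f.name: f.type for f in fields(BalanceQuery)}
    if set(raw) != set(expected):
        raise ValueError(f"expected keys {sorted(expected)}, got {sorted(raw)}")
    for name, typ in expected.items():
        if not isinstance(raw[name], typ):
            raise TypeError(f"{name} must be {typ.__name__}")
    return BalanceQuery(**raw)


def fetch_balance(adapter: LedgerAdapter, raw: dict) -> BalanceResult:
    """The only path from agent output to the ledger: parse, then call the adapter."""
    return adapter.get_balance(parse_query(raw))


class InMemoryLedger:
    """Stub that structurally satisfies LedgerAdapter and records every call."""
    def __init__(self, balances: dict[str, int]) -> None:
        self._balances = balances
        self.trace: list[BalanceQuery] = []

    def get_balance(self, query: BalanceQuery) -> BalanceResult:
        self.trace.append(query)
        return BalanceResult(query.account_id, self._balances[query.account_id])


ledger = InMemoryLedger({"ACC-1": 12_500})
result = fetch_balance(ledger, {"account_id": "ACC-1", "currency": "USD"})
print(result)  # BalanceResult(account_id='ACC-1', balance_cents=12500)
```

Because `LedgerAdapter` is a `Protocol`, any existing client that exposes a matching `get_balance` can be wrapped without inheriting from a framework class.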

Conclusion

Vibe coding tools have their place in democratizing software creation and enabling lightweight experiments. But in enterprise contexts where uptime, compliance, auditability, and trust are paramount, they fall short. AI-driven software development is here to stay, but it needs more sophisticated frameworks and better alignment with enterprise requirements to be usable for large-scale systems development.

At Artian, this is exactly what we’ve built: an AI-native enterprise platform where natural language becomes the front end for reusable, auditable, and collaborative agents. These are systems that don’t just generate code but operate within the enterprise fabric to deliver trustworthy, long-lived results. We have also published a six-part white paper that dives into the details of Architecting Enterprise AI Agents.

Because enterprises don’t need vibes. They need outcomes they can trust.